Google recently hosted its Google I/O ’25 keynote, which showcased some of the most advanced breakthroughs in artificial intelligence and digital innovation today.

The event was led by Sundar Pichai, Google’s CEO, who shared not only the company’s latest technological achievements but also personal insights about how these innovations are shaping the future for everyone.

This event wasn’t just a regular product launch or keynote—it was a vivid demonstration of how AI is transforming multiple parts of our lives, from self-driving cars to creative media, coding, learning, shopping, and even how we interact with the internet itself.

In this blog, we’ll walk you through the key announcements and demonstrations. Whether you missed the event or want a detailed recap, this blog covers the highlights.


1. AI Mode

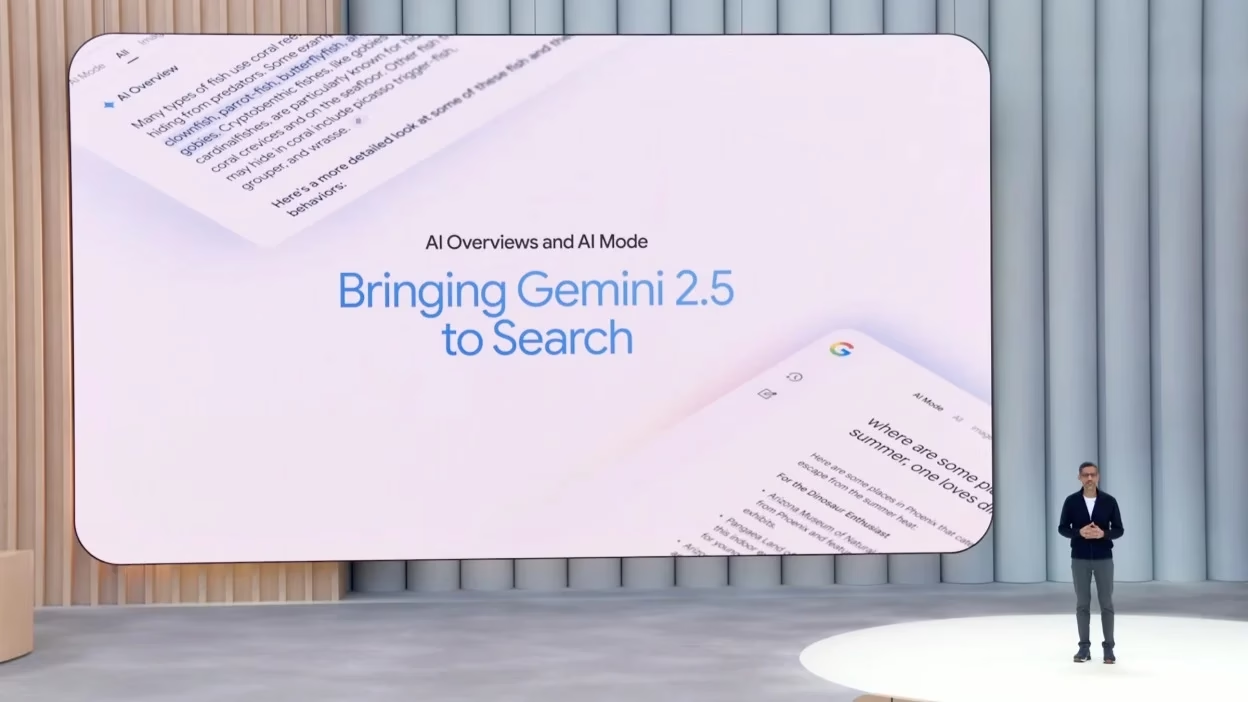

As AI Overviews gained traction, many power users asked for a more complete, AI-first search experience. Since launching, AI Overviews have scaled up to 1.5 billion users every month in more than 200 countries and territories.


“No product embodies our mission more than Google Search. It's the reason we started investing in AI decades ago and how we can deliver the benefits at the scale of human curiosity.”

Sundar Pichai, CEO, Google

In response, Google began testing AI Mode in Search Labs earlier this year, and at I/O it announced that AI Mode is officially rolling out in the U.S., with no sign-up needed.

AI Mode is Google’s most advanced AI-powered Search experience yet. Backed by a custom version of Gemini 2.5, it offers deeper reasoning, multimodal queries, and richer follow-up questions, along with useful links to the web.

Over the next few weeks, users will start seeing a new AI Mode tab in Search and right within the Google app’s search bar.

1.1 How Is AI Mode Changing the Way We Shop Online?

Building on the AI Mode experience, Google is also transforming how we shop online.

Now, when you search for products, you get personalized recommendations powered by Gemini and Google’s massive Shopping Graph, which features more than 50 billion product listings, about 2 billion of which are refreshed every hour.

Whether you’re looking for the perfect travel bag or a pair of hiking boots, AI Mode helps you browse visually, ask detailed questions, and narrow down results intelligently.

The real innovation, though, is virtual try-on. Powered by a new AI image generation model, the “try it on” feature lets you upload a photo of yourself and see how outfits actually drape and fit on your body, so you can picture yourself in that vacation dress or formal shirt before you buy.

Once you’ve found the perfect item, a new agentic checkout system lets you track price drops and, when the timing’s right, complete the purchase on your behalf, securely with Google Pay.

This is more than just shopping. It’s an AI-first, seamless, visual experience that makes buying what you love faster, smarter, and more personal.

2. Gemini 2.5 Pro Deep Think

Another of the most inspiring parts of the event was the update from Demis Hassabis, CEO of Google DeepMind, who announced Gemini 2.5 Pro Deep Think, a new mode built to push AI’s reasoning capabilities even further.

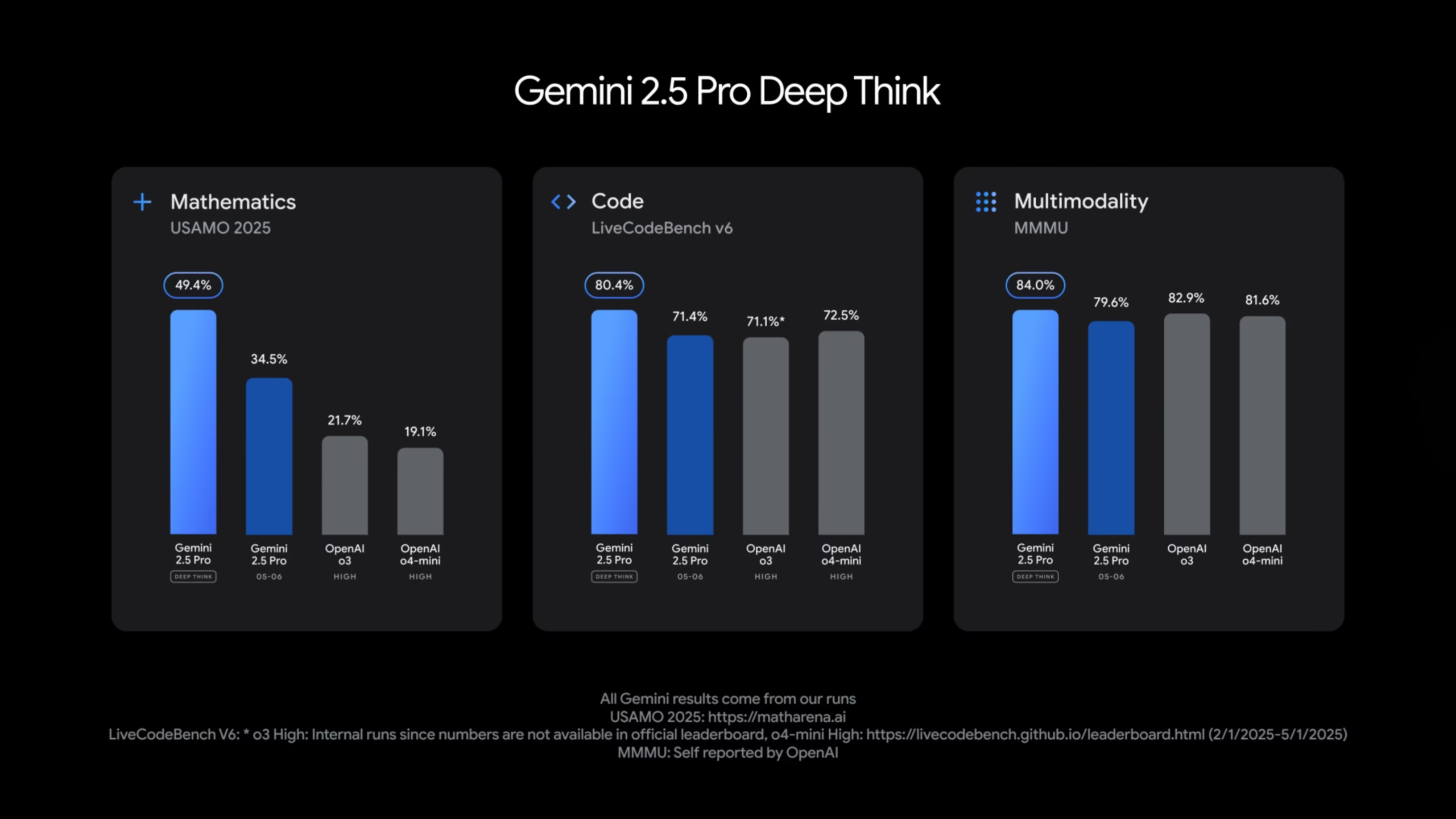

Designed for users who want maximum model performance, Deep Think gives Gemini more time to think and reason before generating an answer, delivering breakthrough results across math, coding, and multimodal benchmarks.

According to Google, Deep Think achieved a standout score on USAMO 2025 (the 2025 USA Mathematical Olympiad), topped the charts on LiveCodeBench, a competition-level coding benchmark, and led on MMMU, a benchmark for multimodal understanding.

For now, Google is taking extra time to conduct frontier safety evaluations, so Deep Think is initially available only to trusted testers via the Gemini API before a wider release.

In a demo, Google showed how developers can simply describe what they want their software to do. Gemini then writes, improves, and debugs the code, drastically speeding up the development cycle.

Demis also shared how AI is already making a big impact in science. Tools like AlphaFold 3 help predict how molecules interact, which is a huge step for biology and medicine.

AlphaProof solves tough math problems, AI co-scientist works with researchers to generate and test new ideas, and AlphaEvolve helps improve how AI models are built. AlphaFold in particular has become a go-to tool in many biology labs, used by millions of researchers around the world.

"We’re in an exciting moment in AI. We’ve made incredible progress on our Gemini models in a short amount of time — and we’re just getting started."

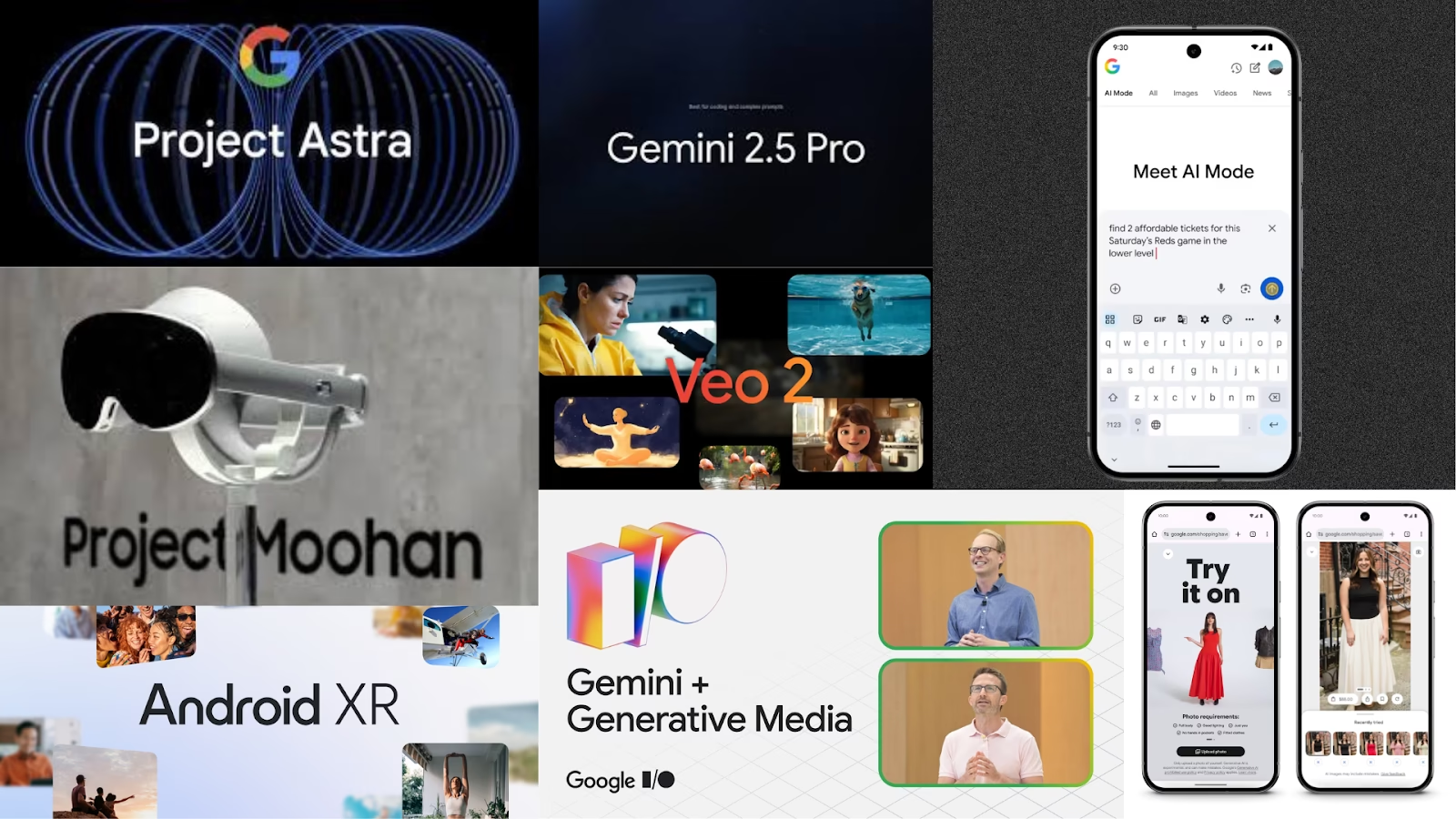

3. What Is Project Moohan and Why Is It Exciting?

One of the most exciting sneak peeks at Google I/O 2025 came from Josh Woodward, who introduced Project Moohan — a new immersive XR platform being co-developed by Google and Samsung.

Project Moohan is focused on delivering a next-gen spatial computing experience that combines the best of Android, Samsung hardware, and Qualcomm’s XR chips, all powered by Gemini AI.

This upcoming platform will support hands-free, AI-first interfaces designed for the future of work, play, and everyday life — blending physical and digital worlds seamlessly.

From productivity tools that float in your space to AI agents that understand your surroundings, Project Moohan promises to push the boundaries of what we expect from wearable and immersive tech.

The first hardware device, a new wearable XR headset from Samsung, is expected to launch soon, and developers will get early access through an SDK later this year. It’s Google’s biggest step yet toward building a truly AI-native XR ecosystem.

4. Generative Media: Empowering Creativity Like Never Before

Jason Baldridge, a research scientist at Google, spoke passionately about how generative media is transforming the creative landscape. Google is committed to building technology that empowers the creative process rather than replacing it.

“Whether you are a creator, a musician, or a filmmaker, generative media is expanding the boundaries of creativity. By working closely with the artistic community since the beginning, we continue to build technology that empowers their creative process.”

Jason Baldridge, a research scientist at Google

Jason emphasized that AI acts as a collaborative partner for artists, helping them explore new ideas and speed up routine tasks so they can focus more on their artistic vision.

For musicians, tools like Music AI Sandbox help compose fresh melodies; filmmakers can quickly visualize scenes; and digital artists can experiment with countless variations of their work. Generative media helps unlock creativity in ways that were previously unimaginable.

At the event, Jason Baldridge also unveiled Veo, Google DeepMind’s most advanced generative video model to date. Veo can create high-quality 1080p videos guided by just a simple text prompt.

It understands cinematic language like camera movements (e.g., time-lapses, aerial shots, and close-ups), visual styles, and scene composition, allowing creators to bring their ideas to life with more precision and realism.

What makes Veo truly stand out is its understanding of physics, lighting, and depth, which helps generate video content that feels natural and consistent — even across longer sequences.

Veo also supports masked editing, meaning creators can modify just specific parts of a video without needing to re-render the whole scene. This gives artists unprecedented control over their output.

5. What’s New with Android XR and Spatial Computing?

At Google I/O 2025, one of the big highlights was Android XR, Google’s next leap into spatial computing. Sundar Pichai and team announced that Google is building a new Android-based platform for extended reality (XR) in collaboration with Samsung and Qualcomm.

This platform is designed to support immersive experiences across AR (Augmented Reality), VR (Virtual Reality), and MR (Mixed Reality). It will allow developers to build powerful apps that blend the digital and physical worlds, from interactive 3D environments to real-world overlays.

A developer kit is coming later this year, and the platform is expected to power future headsets and wearables, including Samsung’s upcoming XR device. Running on Qualcomm’s XR chips and powered by Gemini AI, it will enable natural interactions, voice commands, spatial mapping, and even AI-assisted virtual agents that respond to your environment in real time.

6. Conclusion

The technologies introduced at this event mark a turning point. They promise to democratize creation, enhance productivity, and deepen human connection through AI-powered tools.

Whether you are a developer, entrepreneur, artist, educator, retailer, or simply curious about the future, these innovations open new doors. The tools unveiled are designed to empower you to create, innovate, and solve problems in ways never before possible.

As AI continues to evolve, it will become essential to engage with these tools and opportunities to stay ahead and shape the future you want.

For more informative content and blogs, follow DAiOM and stay tuned.
